LoRA fine-tuning

What’s LoRA

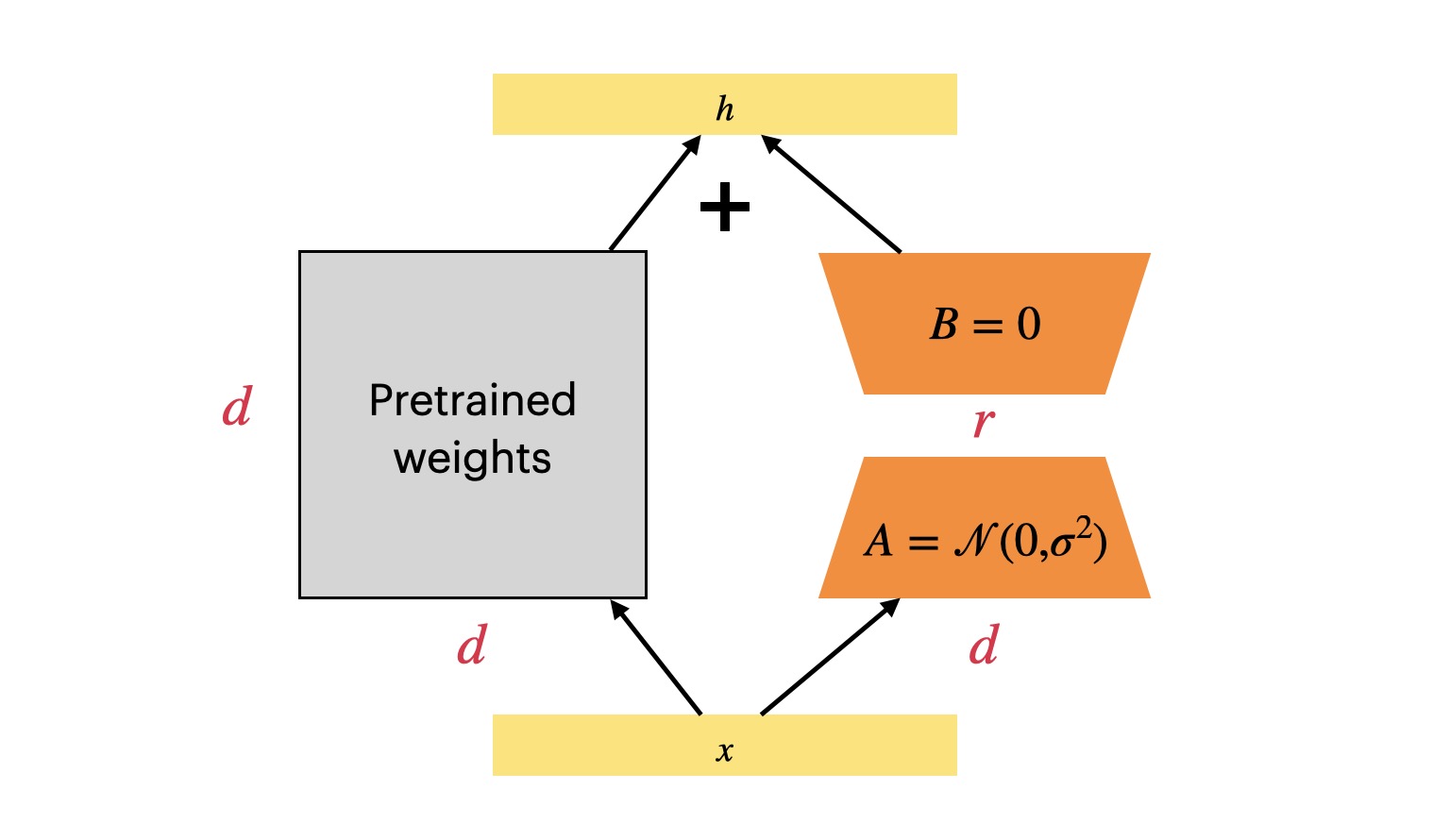

Since the era of large language models (LLMs) arrived, fine-tuning them has become a challenge: the models are so large that full fine-tuning is difficult to carry out. There are two main approaches: freeze the entire LLM and rely on prompt tuning or in-context learning, or freeze the entire LLM and insert trainable modules. Today I will introduce LoRA (Low-Rank Adaptation), which follows the latter approach. It was proposed by a team at Microsoft [1].